When National Public Radio host Gregory Warner visited Davos earlier this year, he discovered a dizzying world of ranks and stats, all designed to help guide investors and do-gooders. Representatives from India, Ukraine, Indonesia and other countries, he quickly realized, would pitch media and investors only on their top rankings, and omit or hide the negative ones. It was as if every country portrayed only its most beautiful self.

International organizations today spend an astonishing amount of time and money creating global rankings. How useful are they in such a distorted field? What if those providing the data (the governments themselves) have an incentive to fudge or misrepresent it? And what do data-driven objectives like the UN Development Goals even mean, “if not a single one mentions the words individual rights, civil liberties, or democracy, even once?”

Alex Gladstein of the Human Rights Foundation recently asked some of these questions in The New Republic, pointing to several cases of dictators who would fake or misrepresent their countries’ stats and use them to mask or distract from repression. “Intellectuals and world leaders might do well to reflect on their worship of development numbers over human rights concerns,” he wrote.

As someone working for one of those organizations promoting international rankings, I find Gladstein’s observations extremely important, and many of his concerns valid. But I’d argue there is another side to this issue, too: Rankings can be a force for good, providing a start to policy conversations and a way to focus a government’s priorities.

It’s important, though, to recognize the validity of Gladstein’s arguments. After I read the piece, my wife, who is from Venezuela, reminded me of an award that her president, Nicolás Maduro, collected from the Food and Agriculture Organization of the United Nations in 2013 for reducing hunger in the country, based on data the regime itself had supplied. It was maddening because many Venezuelans were then struggling with food shortages, and continue to do so to this day.

But, in general, the shortcomings of rankings are well known to international organizations and statisticians, and preventing misuse is a top priority. I remember many nights when my colleagues in Geneva stayed late at work as they tried to triangulate the thousands of data points they received; in the end, they often excluded countries from their rankings, precisely because the math didn’t add up. That’s a good first “check-and-balance” on their misuse.

Even questionable data, strangely enough, can be useful. As NPR’s Gregory Warner put it when surveying Davos’s data scene: “Even if rankings don’t actually tell us our real score, they may at least encourage us to play the same game.”

What this means is that rankings give governments a starting point to focus attention. And along the way, of course, they provide incentives for those who are driven by data or by competition with their past selves or neighbors. Ultimately, rankings and their spinoffs help provide roadmaps for improving what is measured.

After several years of measuring the Global Gender Gap, for example, the World Economic Forum’s Gender, Education and Work team was approached by some countries to help close their gender gap. Turkey, a poor performer, was one of the first countries to sign up for such a Gender Gap Task Force in 2012. There were plenty of speed bumps and lessons along the way. But when the task force concluded its work four years later, Turkey had managed to close its gender gap by more than 10 percent relative to its starting point in the World Economic Forum report. Turkey’s well-regarded Economic Policy Research Foundation (TEPAV) think tank also reported major gender gap improvements.

Often rankings also form the start of a national conversation, even without our active involvement. That was the case in the United Arab Emirates, where the vice president Sheikh Mohammed launched the Gender Balance Council in 2015, aiming to make the UAE a top-25 country for gender parity by 2021, as measured by the UN’s Gender Inequality Index. In that index, the Arab nation was ranked 42nd when its ruler decided to set up the council.

Similarly, while the Ethiopian government remains repressive, sometimes violently so, its pursuit of the UN’s Millennium Development Goals has spurred multiple public health policies with tangible impacts. The goals are even credited with inspiring the country’s 2005 abortion law reform, which by many accounts, including those not coming from the government, has drastically reduced maternal mortality.

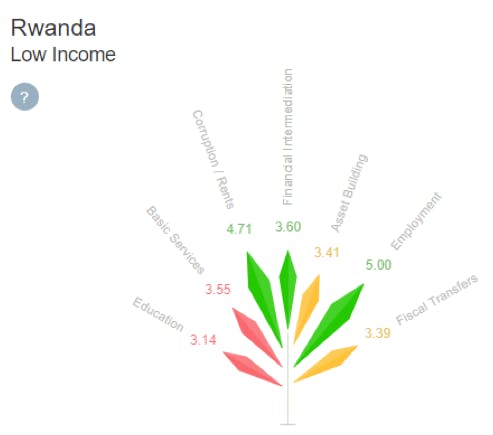

Statistics also don’t always need to be turned into rankings to drive change. Indicators can serve as a roadmap for improvement in and of themselves. This logic was incorporated in the World Economic Forum’s Inclusive Growth and Development Report, which debuted in 2015. Alongside a more traditional ranking, the report includes Policy and Institutional Indicators for Inclusive Growth (PII) that take into account crucial (yet, to date, often neglected) governmental policy considerations such as income inequality, intergenerational equity and environmental sustainability.

The PII organizes and aggregates more than 100 of these indicators in a “key performance indicator” (KPI) dashboard, but stops short of publishing rankings. (The example of Rwanda is shown above.) This kind of scorecard de-emphasizes the potential prestige aspect, prioritizing the data’s use not as a way for a regime to paper over flaws, but as a tool for identifying areas for policy improvement.

Finally, there are occasionally states that own their poor rankings. Saudi Arabia, as Gregory Warner discovered, is not at all shy to own up to its low rankings on women in the workforce, CO2 emissions, diabetes prevalence, or migrant rights. The reason, the country’s statistician claimed, is that Saudi Arabia wants to improve and can only do so by being “transparent.”

In the end, Winston Churchill’s oft-quoted words on democracy, now applied to everything from email to monogamy, may be just as apt for international rankings: “Many [ways to nudge countries into policy reforms] have been tried, and will be tried in this world of sin and woe. No one pretends that [rankings are] perfect or all-wise. Indeed it has been said that [rankings] are the worst [way to nudge countries], except for all those other forms that have been tried from time to time.”

Perhaps, then, that is the accurate way to look at rankings. They aren’t perfect, and it’s important that we be aware of their shortcomings. But in the end, they do more good than harm.