On January 26, 2008, Joseph Weizenbaum, a famed and dissident computer scientist in his eighties, ascended the dais in Davos for what would be one of his last public appearances. Onstage with the founders of Second Life and LinkedIn, Weizenbaum sulked through his fellow panelists’ talks about the promise of social networks, which at the time were still somewhat novel. “These people coming together,” said Philip Rosedale of Second Life, “are building essentially a new society in cyberspace.” Weizenbaum was not convinced. What effects might that virtual society have on the real world? In his long career, he had met many people who earnestly believed technology would soon alleviate humanity’s problems, and yet salvation always proved to be farther down the road.

This skepticism—which Noam Cohen highlights in his new book, The Know-It-Alls—is rare in Silicon Valley today, where large tech companies operate on the premise that they can both change the world and make a lot of money in the process. Facebook promises to make us more connected, Google to organize the world’s information, Twitter to democratize free speech and the public sphere, and Amazon to make retail an almost frictionless experience. Together with Microsoft and Apple, these companies are worth over $3 trillion. Their top executives are rewarded with millions in compensation and have acquired the power to shape culture and politics. Google was, for instance, a leading spender on lobbyists in the Obama years, and since the 2016 election, a delegation including Apple’s Tim Cook and Amazon’s Jeff Bezos has attended tech summits at Trump Tower and the White House, where they have discussed jobs, immigration, and infrastructure projects.

Tech leaders, Cohen writes, tend to “travel in Democratic Party circles but oppose unions, hate-speech codes, or expanded income redistribution.” Their companies sock away billions in offshore havens and funnel their European operations through tax-friendly Ireland. While preaching the values of freedom and independence, these firms collect vast amounts of information on their users, using this welter of data to form detailed dossiers and influence our behavior. Their products are organized less around improving lives than around occupying as much of their users’ attention as possible. Styling themselves as both pro-corporate and vaguely rebellious, “the Know-It-Alls” represent “a merger of a hacker’s radical individualism and an entrepreneur’s greed.”

Silicon Valley has not always nurtured these attitudes, nor do they inevitably result from the forward march of technology. “At crucial moments,” Cohen argues, “a relatively few well-positioned people made decisions to steer the web in its current individualistic, centralized, commercialized direction.” The Know-It-Alls is an attempt to trace the origins of Silicon Valley’s values, by examining some familiar figures—Mark Zuckerberg, Peter Thiel, Marc Andreessen, Sergey Brin, and Bill Gates—along with a few lesser-known personalities and their private motivations. How, Cohen asks, did we get to a point where a handful of companies and their executives rule our digital world?

In the early 1960s, the Santa Clara Valley was a prosperous region, best known for hosting a few big chip manufacturers that relied on government contracts. Computers were the domain of universities, big business, and the occasional well-heeled hobbyist. There was no software industry to speak of, and some room-sized machines still ran on punch cards. Not until 1971 did the area gain the name Silicon Valley, which the journalist Don Hoefler popularized in an Electronic News article. The Valley itself was relatively bucolic, honeycombed with orchards and farms.

Many in the nascent tech industry didn’t foresee the rise of the personal computer and thought it laughable that people would want a small computer, with its puny processing power, in their home. As Leslie Berlin writes in her new history of Silicon Valley in the 1970s and ’80s, Troublemakers, some companies didn’t know how to market their inventions. Intel, for instance, began by pitching its microprocessor as a control device used for commercial and industrial applications—farm equipment, elevators, dialysis machines, irrigation. (Early microprocessors were also used in Minuteman missiles.) Software, too, was not considered something that the general public would pay for. Why would they, when it was freely traded among hobbyists?

Perhaps no story of missed opportunity is as widely mythologized as that of Xerox’s PARC research lab. Under the leadership of Robert Taylor, a former Advanced Research Projects Agency official who helped develop the early Arpanet, PARC was renowned for its research and development, which combined academic rigor with a major corporation’s enormous cash reserves. PARC researchers helped design the Alto, an early personal computer that became widely used within Xerox. But obstinate executives refused to start selling the machine to other companies and individuals, thus missing out on Xerox’s best chance to enter the personal computing market. PARC would later become famous for failing to capitalize on many of the innovations developed there, from graphical user interfaces to the mouse.

The inability of lumbering establishment firms to see opportunity sparked frustration, if not outright revolt, among some employees. Tracing the Valley’s history from the ’60s to the mid-’80s, Berlin describes the “generational hand-off” that took place when “pioneers of the semiconductor industry passed the baton to younger up-and-comers developing innovations that would one day occupy the center of our lives.” Many of the characters in Troublemakers work at large chip manufacturers or industrial concerns before alighting on some new idea that can’t be adequately commercialized at their current jobs. Longing for independence, they strike out on their own, perhaps recruiting another founder along the way.

In many cases, these defections—the courageous moments when a bootstrapping entrepreneur decided to break away from the comfort of an engineering job at Hewlett-Packard or IBM—acquire a mythos of their own. The eight Shockley Semiconductor employees who left to launch Fairchild Semiconductor became known as the Traitorous Eight, while the companies that later spun out of Fairchild, like Intel and AMD, came to be known as the Fairchildren. The malaise at Xerox drove an exodus of staff from the lab, and its alumni would go on to found companies like 3Com, Adobe, and Pixar. “We drank at the Xerox PARC well deeply and often,” said Don Valentine, an influential venture capitalist, speaking for the many companies that would benefit from PARC talent.

A central figure in the shift toward commercialization was Niels Reimers, a former naval officer who, after some time at two electronics firms, took up an administrative post at Stanford. There he would use his professional expertise, along with his connections with what some students had taken to calling the “evil forces”—the Pentagon and defense contractors—to cement Stanford’s place in the growing tech industry. At a time when many professors worried that commercializing their discoveries would compromise their academic integrity, Reimers showed no such hesitation. By pushing professors to patent their discoveries, he transformed Stanford’s Office of Technology Licensing, earning the school hundreds of millions of dollars and establishing it as a key incubator for startups. Among other advances, he helped usher recombinant DNA technology to market under the patronage of Genentech, a Stanford-affiliated startup that would grow into a biotech giant.

The arc of Reimers’s career, as told in Troublemakers, tracks the maturing relationship between Stanford and Silicon Valley. By the time Berlin’s book ends, the two cultures are nearly inseparable. Whole industries have been invented, from personal computing to biotech, while the area’s fruit trees have been bulldozed, making way for office parks for the likes of Atari, Apple, and Intel.

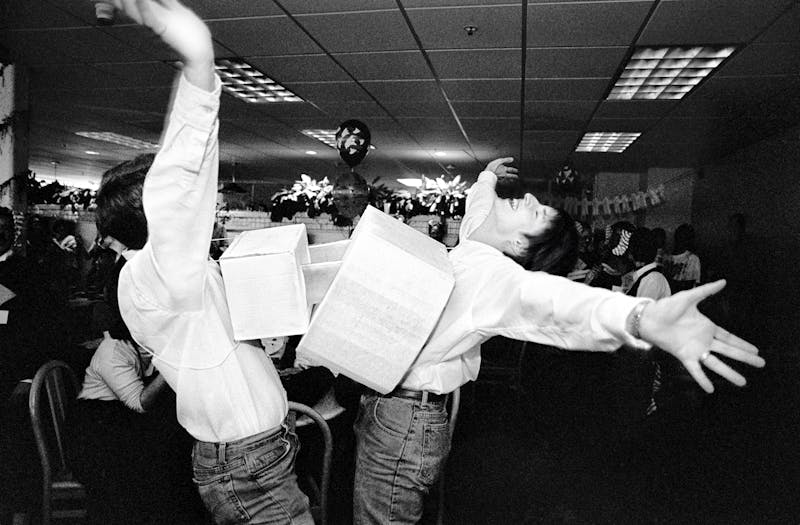

During this time, the tech industry was taking on many of the features that define it today. For one thing, a deep undercurrent of sexism ran through the industry in this period. We learn that early word processors were difficult to market to executives, who saw typing as coded female. The offices of the video game company Atari were less than professional, as founder Nolan Bushnell held meetings in hot tubs, and workers smoked weed on the production line. (Bushnell, who would later found Chuck E. Cheese’s, was also known to wear a T-shirt that said I LOVE TO FUCK.) A Warner executive who was considering acquiring the company worried that Atari was “a bunch of guys getting whacked every day and chasing women.” Sandra Kurtzig—the founder of ASK, for a time one of the largest software companies in the world—“was mistaken repeatedly for a ‘booth babe’ ” at an industry conference in 1976.

While Berlin acknowledges the backward gender dynamics at play, she sees them less as a problem with the industry than as a product of the times. That would be a more convincing argument if tech were not still overwhelmingly male-dominated and in many cases hostile to women. Companies like Uber are only beginning to be called to account for their institutional sexism: In February 2017, former Uber engineer Susan Fowler wrote on her personal blog about the sexual harassment she experienced at the ride-sharing company. In 2012, Ellen Pao sued the Silicon Valley–based venture capital firm Kleiner Perkins Caufield & Byers for gender discrimination after being repeatedly passed over for promotion—and lost. Only 31 percent of Google employees are women, and, according to an internal study that was leaked to The New York Times in September 2017, they are paid less than their male counterparts.

During the transitional period in Berlin’s book, Silicon Valley executives and programmers show little concern for the core values of American democracy. Even though Troublemakers is partly set in the Bay Area in the ’60s and ’70s—a hub of activism and protest—her entrepreneurs appear largely insulated from the period’s social and political ferment. A few oppose the war in Vietnam, while others are leery of accepting research funding from the Defense Department, though many still take it. “Defense-based industries, with their lack of price sensitivity, had driven technological change in Silicon Valley since before the Second World War,” Berlin writes. During the war, agricultural companies began converting tractor treads into tank treads. In 1960, nearly 40 percent of Stanford’s budget came from the federal government, Cohen notes in The Know-It-Alls.

Economic ties were not the only reason many engineers stayed out of the era’s defining events. Some believed that politics was simply a distraction from the real work of technological disruption: Gordon Moore, one of the founders of Intel, said that engineers “are really the revolutionaries in the world today—not the kids with the long hair and beards.” Silicon Valley companies would go on to disrupt the nature of work, as websites replaced many brick-and-mortar businesses and apps like Uber and TaskRabbit began offering short-term, insecure gigs instead of long-term, salaried employment.

From their earliest years, the companies Berlin profiles opposed labor protections, convincing workers that they were in fact better off on their own. Indeed, in her account, Berlin conjures an improbable world in which management and frontline workers lived in conflict-free bonhomie. As Fawn Alvarez tells Berlin while describing her life at ROLM, a company that manufactured ruggedized computers for the defense industry before moving into phone systems, “We had good chairs, good lighting. We could go to the bathroom without raising our hands. We had dignity. What could we get from a union that we didn’t already have?”

Perhaps unbeknownst to Alvarez, companies like ROLM actively engaged in union-busting tactics. “The four ROLM founders shared a dislike of unions, which they believed fostered adversarial relationships among people who should be working together,” Berlin gently notes. Industry organizations “offered legal aid to companies facing unionization efforts and multiday seminars for ‘companies that are nonunion and wish to remain so.’ ” Along with the offshoring of labor, these efforts would help keep Silicon Valley union-free. Meanwhile, the industry-wide cooperation involved would foreshadow more recent schemes in which top tech firms agreed to fix wages and not to poach one another’s talent.

Berlin’s history ends before the influx of vast sums of money began to undermine the idealism of many tech companies. For that part of the story, Cohen’s book, with its focus on the companies of the ’90s, is instructive. Consider Google, now a subsidiary of the holding company Alphabet. When they were Ph.D. students at Stanford working on early search-engine technology, Google’s founders, Sergey Brin and Larry Page, realized that the pursuit of advertising revenue could cause search engines to unfairly promote or bury some search results—a cell phone manufacturer would not, for instance, want its ad to appear next to an article on the dangers of mobile phones. It was a minefield of ethical entanglements and potential unintended consequences. “We believe,” they concluded, “that it is crucial to have a competitive search engine that is transparent and in the academic realm.”

The two young computer scientists had stumbled upon a thundering truth, and yet they didn’t heed it. In the mid-to-late ’90s, Stanford was a hotbed of entrepreneurship; there was already an on-campus search-engine startup in the form of Yahoo! Venture capitalists were sometimes funding students before they graduated. Brin and Page thought that they could be research-focused computer scientists while also using the might of big business to improve the human condition. As Page remarked, “I didn’t want to just invent things, I also wanted to make the world better.” By 1998, the offers were already coming in, as some of their professors urged them to seek outside funding. Andy Bechtolsheim, a founder of Sun Microsystems, was so impressed by a Google demo that he wrote Brin and Page a $100,000 check, before the company had even been incorporated. Several other outside investors were brought in (one of them was Jeff Bezos), along with an infusion of venture-capital cash.

Within a few years, Brin and Page caved and began developing the carefully targeted ad products that would soon turn the company into a Goliath. Rather than modeling transparency, Google became secretive in its practices, asking neither forgiveness nor permission when it decided—to name one example among many—to scan the world’s corpus of books. Even as Google tracked its users’ clicks, habits, and locations in a vast feat of surveillance, it continued to style itself as a civic-minded entity, providing information services to a world that increasingly saw being online as something like a human right.

The awkward fusion of market values and vague humanitarianism has become the defining feature of contemporary Silicon Valley ideology. In a paper published in 1970, John McCarthy, an influential computer scientist who was later a visitor to the Google campus, envisioned the boundless potential of networked communications, an à la carte system in which users would be free to trawl the sum total of human knowledge. As Cohen notes, “The only threat McCarthy could see to the beautiful system he was conjuring were monopolists.” Ironically, McCarthy became an influence on one of the largest technology companies of them all, one that has frequently been deemed a monopoly for its dominance of search and advertising services. Google has been the subject of a spate of antitrust investigations in Europe, Australia, and the United States, and faces billions in potential fines for everything from mishandling of user data to burying results for competitors’ products—just the kind of behavior about which the company’s founders once voiced concern.

To get from the optimism of the ’90s to today’s surveillance-driven monopolies necessarily involves a bending of ideals. Alphabet has benefited from a strong institutional culture—its motto until 2015 was DON’T BE EVIL—that assures its thousands of employees their work is synonymous with human progress. It similarly reassures its users by projecting an image of public-spiritedness—the world’s knowledge, provided gratis by Google. And yet, an organization cannot vacuum up the world’s information, map its every street and house, and track the tastes and behaviors of billions of people without reproducing some offline biases and inequalities. Facial recognition algorithms have misidentified dark-skinned people as animals. Search results for traditionally black names have produced ads for bail bonds and background checks. Payday lenders use microtargeting to push their products to people who are precariously employed and vulnerable. The list of sins is extensive.

The promises of convenience and corporate benevolence may so far have limited much of the backlash against tech companies. Longtime Bay Area residents have for years protested the influence of big tech on their communities, where the price of housing stock has risen dramatically. Now the rest of the country is beginning to wake up, partly because of concerns over the way social media contributed to polarization and spread misinformation during the 2016 election. The tech industry now faces a level of congressional scrutiny not seen since the Microsoft antitrust actions of 20 years ago. Its executives have been blasted by congressional committees investigating Russian influence in the 2016 election. “You have a huge problem on your hands,” Senator Dianne Feinstein told representatives of Google, Twitter, and others in November 2017. “You have to be the ones to do something about it. Or we will.”

A refusal to reckon with things as they are, to speak in plain language, continues to dog conversations about the inequalities produced by the tech industry. The assumption that technology is inherently a force for good and that the social changes it brings are inevitable and irresistible contributes to the power of multinational corporations, discouraging their users from demanding better protections and more regulation.

Joseph Weizenbaum stood out among his fellow panelists at Davos because he used a different, more critical vocabulary. The others seemed never to have heard anyone say no to them before, much less attack the pillars of their industry. He was used to being ignored—a colleague once accused him of being a “carbon-based chauvinist” because he had raised questions about the promise of artificial intelligence. What Weizenbaum wanted, it seems, was some acknowledgment that Second Life, and whatever virtual creations might follow, would always be a sideshow, secondary to the more important work of satisfying basic human needs.

On the stage in Davos, Weizenbaum pleaded the case for critical thinking. “We must acknowledge that mankind is a brotherhood and a sisterhood,” he told the audience, “and we should not exploit one another the way we are doing it.” He might as well have been shouting into a void. Silicon Valley has yet, it seems, to develop a theory of its own power, to fully grok its influence on our economic, social, and political lives. With few exceptions, popular tech writing takes an overwhelmingly admiring approach to its subjects. Meanwhile, the academy is filled with blisteringly smart, well-sourced scholars of technology and communications who carry Weizenbaum’s humanistic torch—such as Latanya Sweeney, who has studied online discrimination, and Shoshana Zuboff, who has written on “surveillance capitalism,” a term she helped popularize. Like Weizenbaum, these critics find their expertise often overlooked. Who wants to stop and ask questions when the money’s rolling in?

When tech leaders prophesy a utopia of connectedness and freely flowing information, they do so as much out of self-interest as belief. Rather than a decentralized, democratic public square, the internet has given us a surveillance state monopolized by a few big players. That may puzzle technological determinists, who saw in networked communications the promise of a digital agora. But strip away the trappings of Google’s legendary origins or Atari’s madcap office culture, and you have familiar stories of employers versus employees, the maximization of profit, and the pursuit of power. In that way, at least, these tech companies are like so many of the rest.